AI Screening Systems Face Fresh Scrutiny: 6 Key Takeaways From Claims Filed Against Hiring Technology Company

Insights

3.27.25

A Deaf, Indigenous woman claims an employer’s use of a popular automated video interview platform unfairly blocked her promotion due to AI-driven biases related to her disability and race. The ACLU filed charges on March 19 on the woman’s behalf with the Colorado Civil Rights Division and the EEOC against her employer (Intuit) and the tech company she claims used AI in a discriminatory manner (HireVue). With multiple ongoing lawsuits challenging similar systems, employers relying on AI tools for hiring and other workplace uses are facing increased risk. What does the case mean for employers? Here are six critical takeaways.

Promotion Denied: Allegations of AI Bias Against Deaf Employee

The following allegations are taken from the public filings submitted by the ACLU on behalf of the employee, and thus tell only one side of the story. The employer and the HR tech company will have a full opportunity to present their side of the story and have their day in court, but until then, take these allegations with a grain of salt.

An Indigenous Deaf employee – named simply as “D.K.” in public filings – says she had an extensive record of high performance at Intuit, a multinational financial software company recognized for popular products like TurboTax, QuickBooks, and Mint. She said she applied for a promotion to Seasonal Manager, which required her to complete an automated video interview.

She alleges that this process was administered by HireVue, a prominent HR technology company that provides AI-powered hiring and assessment tools widely used by businesses for screening candidates and streamlining recruitment processes. D.K. said she proactively alerted Intuit to accessibility issues with HireVue’s platform, specifically its alleged inability to offer accurate captioning for applicants, but that her request for human-generated captioning (Communication Access Realtime Translation, or CART) was denied.

Instead, D.K. alleges she was forced to rely on less accurate automated captions during the interview, claiming her comprehension and performance were negatively affected. She contends that the feedback she later received from HireVue’s automated analysis criticized her communication abilities, a criticism she attributes directly to these accessibility barriers.

Key Legal Issues

The central legal challenge focuses on employers’ and vendors’ obligations to ensure AI hiring tools do not unfairly impact individuals because of their disability status or racial characteristics, particularly when explicit concerns regarding accessibility and bias are raised. The complaint alleges violations of:

- Americans with Disabilities Act (ADA) – D.K. claims that Intuit’s failure to accommodate her specific accessibility needs, by denying CART captioning, constitutes discrimination based on disability, directly disadvantaging her during the interview.

- Title VII of the Civil Rights Act of 1964 – She also claims that HireVue’s AI tools discriminated against her due to her Indigenous ethnicity, as she alleges that automated speech recognition systems used within the platform often misinterpret or inaccurately evaluate speech patterns of non-white, accented speakers.

- Colorado Anti-Discrimination Act (CADA) – D.K. also cites Colorado’s state anti-discrimination law, which provides additional protections for job applicants and employees against discrimination based on both disability and race, reinforcing the federal claims and broadening the scope for accountability.

How Intuit and HireVue Responded

According to Bloomberg Law (subscription required), Intuit denies the allegations entirely, stating it provides reasonable accommodations to all applicants. HireVue also rejects the claims, saying that Intuit didn’t even use an AI-backed assessment in this particular hiring process.

What’s Next?

As this claim progresses, the EEOC and Colorado Civil Rights Division will first investigate the allegations to determine if there is sufficient/probable cause to support a finding of discrimination.

- If cause is found, the agencies can require the parties to conciliate/mediate or may attempt to conciliate a settlement between the parties.

- Should conciliation/mediation fail or if the agencies find sufficient/probable cause of wrongdoing, the claimant may file a complaint in federal or state court within 90 days of receiving a right to sue letter from these agencies.

- But D.K. will also have an opportunity to voluntarily withdraw her claims before any decision is reached. Even after a no-cause finding is made by these agencies, D.K. can still request a right to sue letter.

We’ll monitor the situation and provide updates as necessary.

Why This Litigation Matters to Your Organization

This latest filing highlights a critical and rapidly evolving intersection between advanced AI hiring technologies and established anti-discrimination legal frameworks. As employers adopt sophisticated AI-driven hiring and assessment systems, they face potential legal risks if these tools inadvertently or systematically disadvantage protected groups (such as individuals with disabilities or those from diverse backgrounds).

Other AI Litigation We’re Following

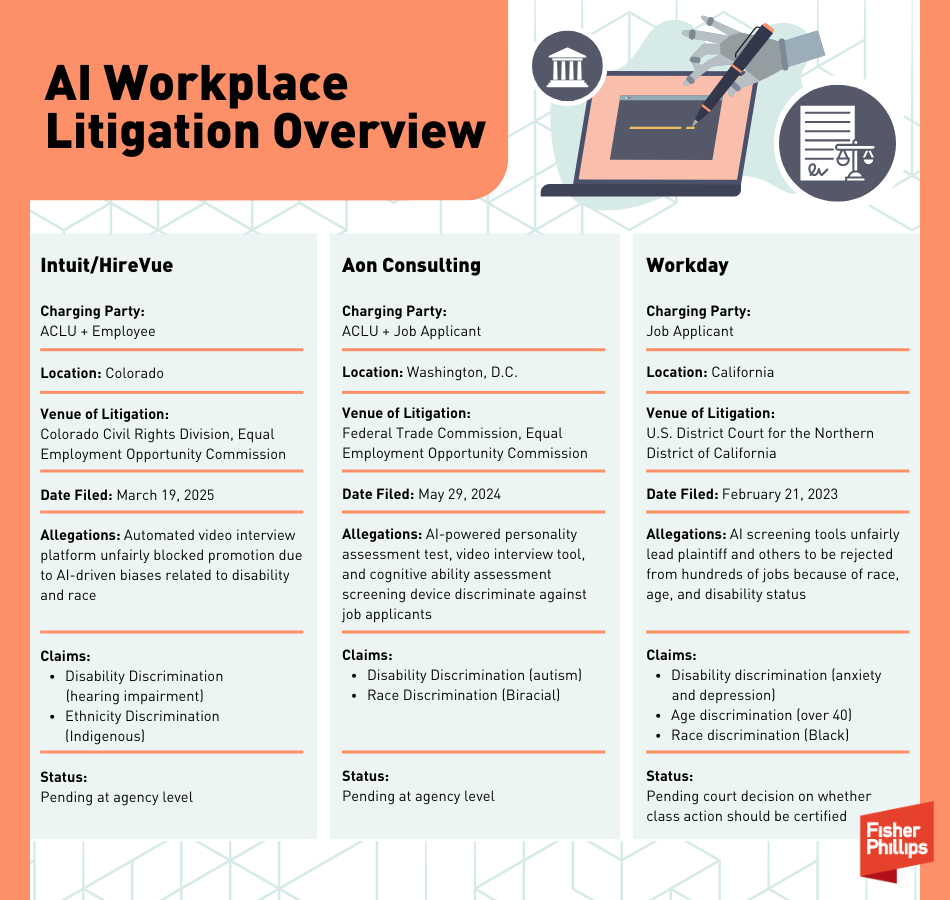

This isn’t the first piece of AI-related workplace litigation to be filed – and it won’t be the last. We’re also tracking two other critical cases that are currently pending:

- Workday Lawsuit: Allegations of discrimination against Black, older, and disabled applicants due to algorithmic screening practices are pending in a California court. And the plaintiff recently filed a motion to transform his case into a national class action.

- Aon Litigation: The ACLU filed an earlier complaint with the FTC against the maker of another AI screening tool, claiming its AI personality tests have a discriminatory impact against applicants with disabilities and those with certain racial backgrounds.

6 Steps Employers Should Take Now

Given the increasing number of claims we’ve been seeing – and the impending rise in AI-related litigation we expect to see in the near future – employers using AI hiring tools should consider taking the following six steps:

1. Conduct Accessibility Audits: Regularly test your systems to ensure effective accommodation for candidates with disabilities.

2. Review Vendor Agreements: Ensure third-party AI vendors commit explicitly to bias-free and accessible solutions. Here is a list of questions you should consider asking your AI vendors before deploying new technology in your workplace.

3. Train HR Teams: Educate staff about potential AI biases and the legal requirements for reasonable accommodations.

4. Allow for Manual Review: Allow applicants to request a human reviewer as a reasonable accommodation during the interview process.

5. Offer Clear Accommodation Pathways: Establish visible, easy-to-use processes for applicants needing accommodations.

6. Monitor and Adjust AI Usage: Continually review data and outcomes to detect, mitigate, and document efforts to address potential biases. Check out our step-by-step guide to developing an AI governance program here.

Conclusion

We will continue to provide the most up-to-date information on AI-related developments, so make sure you are subscribed to Fisher Phillips’ Insight System. If you have questions, contact your Fisher Phillips attorney, the authors of this Insight, or any attorney in our AI, Data, and Analytics Practice Group.

Related People

- Vance O. Knapp, Partner

- Karen L. Odash, Associate